AVVA: An Accessible Future for Self-Driving Cars

Traffic21, Carnegie Mellon

A voice interface that increases independence for visually impaired riders by enabling them to use autonomous vehicles

METHODS

Conversational user interface design, in-person ride-along & observation sessions, qualitative interviews, stakeholder interviews, academic research review, industry analysis

ROLE

Lead Conversation Designer, Researcher

[ 2-person team ]

TIMELINE

January - July 2018

PROBLEM

Visually impaired people cannot operate vehicles independently and have to rely on many different sources of transportation, which are not suited to their needs, are unpredictable, and are limited in use. Shared autonomous rideshares could be the best mobility solution, but they will not have drivers to help users. What happens when there is no human driver?

Solution

AVVA (Autonomous Vehicle Voice Assistant) is a conversational user interface that provides independence, agency, and efficiency to visually impaired users by giving them a way to interact with a shared autonomous ride, from pickup to drop-off.

Outcome

The system is designed to be easily integrated into Lyft, Uber, or any other autonomous vehicle platform.

AVVA IS BUILT TO WORK IN ANY RIDESHARE INFRASTRUCTURE

Welcoming & initiating riders

AVVA welcomes a visually impaired user and introduces the system, including the triggers and utterances they can use. This information remains available throughout the ride.

Partnering with users during the ride

AVVA helps with orientation, navigation, and route options, and acts as a concierge by narrating the surroundings both inside and outside the vehicle.

Full service agent checkout & review

At the end of the ride, AVVA provides specific exit instructions, and a complete checkout and review process.

Process

Anticipating new technology accessibility regulations from the ADA and the Shared-Use Mobility Center, we focused on understanding current mobility issues through qualitative user research and observation. Mapping users’ needs and goals led to the design of the voice interface. We tested the modular system inside a car with visually impaired users, using the Wizard of Oz method.

Findings

The key to a successful ride is giving visually impaired users access to information about their ride and the system at a moment’s notice. Without clearly presented information and the agency to control their riding experience, safety and trust begin to erode.

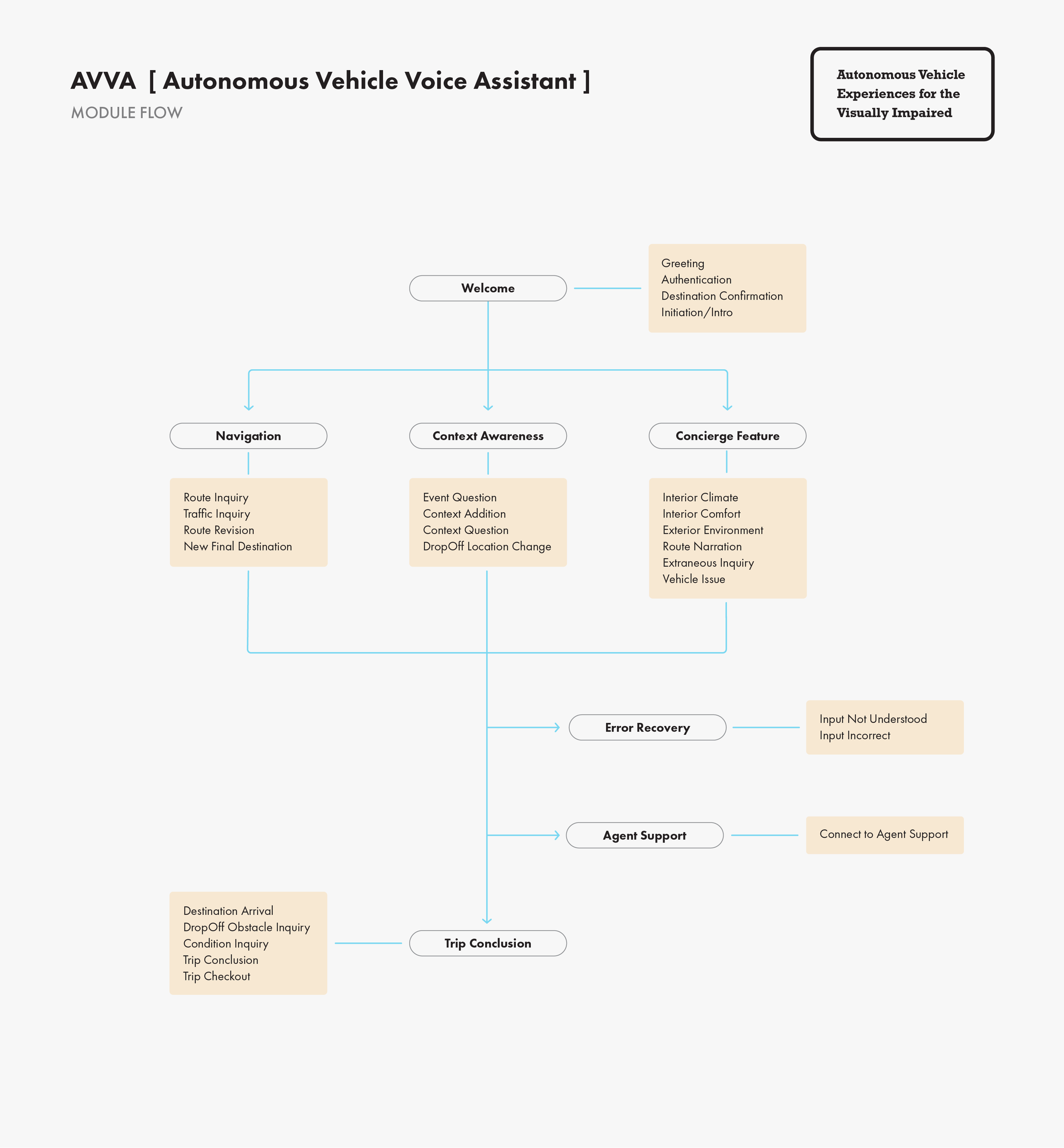

AVVA Conversation Design

USING SPEECH AS A GUIDE

Familiar and responsive

Speech is a familiar method of interaction. AVVA uses it to answer the unique questions of visually impaired riders and to present information in a way that lets users customize and trust their ride, adapting to their needs over time.

Modular design system

The modular conversational voice interface guides a visually impaired user through the entire ride. It is coded with jQuery-based variables, events, and methods, ready to be deployed.

Welcome & Initiation

WARM & FRIENDLY TONE

Using advanced authentication, AVVA verifies that the rider is the correct passenger and is going to the right destination. Riders can re-route or add details about their journey, which are then saved to their preferences.

Route Awareness & Orientation

AGENCY THROUGH TRANSPARENCY

The user feels safe knowing that they can access any information they need, such as questions about the vehicle and its response to route and road conditions. Navigation and wayfinding are descriptive and clear, giving just enough information without overwhelming the user. Re-routing is always an option, giving the user agency.

Concierge Partner

TRIGGERS FROM USERS, INTERNAL & EXTERNAL EVENTS

AVVA helps users within the cabin, making them feel comfortable and at the center of the experience by narrating their surroundings. Its responses are designed around triggers from user utterances as well as internal and external events, with variables, events, and methods coded into each response.
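The case study does not show AVVA's actual code, so the following is only an illustration. It is a minimal Python sketch, written for brevity instead of the jQuery-based implementation described above, of how triggers from the three sources (user utterances, internal events, external events) could map to response templates that get filled with ride variables. The class names, trigger names, and example street names are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Tuple


class Source(Enum):
    """The three trigger sources named in the case study."""
    USER_UTTERANCE = "user_utterance"
    INTERNAL_EVENT = "internal_event"  # hypothetical examples: door closed, seatbelt fastened
    EXTERNAL_EVENT = "external_event"  # hypothetical examples: construction ahead, arriving soon


@dataclass
class ConciergeModule:
    """Hypothetical registry mapping (source, trigger) pairs to response templates."""
    templates: Dict[Tuple[Source, str], str] = field(default_factory=dict)

    def on(self, source: Source, trigger: str, template: str) -> None:
        """Register the response template for one trigger."""
        self.templates[(source, trigger)] = template

    def respond(self, source: Source, trigger: str, ride: Dict[str, str]) -> str:
        """Fill ride variables into the matching template, or ask for clarification."""
        template = self.templates.get((source, trigger))
        if template is None:
            return "Sorry, I didn't catch that. Could you say it another way?"
        return template.format(**ride)


concierge = ConciergeModule()
concierge.on(Source.USER_UTTERANCE, "where_am_i",
             "We're on {street}, about {eta} minutes from {destination}.")
concierge.on(Source.EXTERNAL_EVENT, "construction",
             "There's construction ahead on {street}, so we'll take {detour} instead.")

ride = {"street": "Main Street", "eta": "8", "destination": "your office", "detour": "Oak Avenue"}
print(concierge.respond(Source.USER_UTTERANCE, "where_am_i", ride))
# prints: We're on Main Street, about 8 minutes from your office.
```

Keying templates by both source and trigger allows the same trigger name to get a different response depending on whether the rider asked or the vehicle noticed something. That distinction matters for a concierge that speaks both proactively and on request.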

Errors & Escalation

TRUST THROUGH ERROR HANDLING

AVVA works through errors with gentle clarifying questions and some levity to maintain the relationship. For personal and physical safety reasons, a customer support agent is available on demand for further error handling and escalation.
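As a rough illustration only, here is a small Python sketch of one escalation policy that fits this description. It asks gentle clarifying questions first, hands off to a support agent after repeated misunderstandings, and escalates immediately on safety-related phrases. The threshold of two clarifications, the phrase list, and all names are assumptions, not details from AVVA.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical phrases that skip clarification and go straight to a human agent.
SAFETY_PHRASES = ("emergency", "help me", "i feel unsafe", "stop the car")


@dataclass
class ErrorHandler:
    """Hypothetical policy: clarify gently first, escalate after repeated misses."""
    max_clarifications: int = 2  # assumed threshold, not taken from the case study
    misses: int = 0

    def handle(self, utterance: str, understood: bool) -> Tuple[str, str]:
        """Return an (action, prompt) pair for one user turn."""
        text = utterance.lower()
        # Personal or physical safety concerns always escalate immediately.
        if any(phrase in text for phrase in SAFETY_PHRASES):
            self.misses = 0
            return ("escalate", "Connecting you to a support agent now.")
        if understood:
            self.misses = 0
            return ("continue", "")
        self.misses += 1
        if self.misses <= self.max_clarifications:
            return ("clarify", "Hmm, I didn't quite get that. Could you say it another way?")
        # Repeated misunderstandings: hand off to a person instead of looping.
        self.misses = 0
        return ("escalate", "Let me connect you with a support agent who can help.")


handler = ErrorHandler()
for turn in ["blorp", "blorp again", "still blorp"]:
    print(handler.handle(turn, understood=False)[0])
# prints clarify, clarify, then escalate, one per line
```

Resetting the miss counter after any understood turn keeps a single earlier misunderstanding from pushing a later one straight to escalation.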

Trip Conclusion: Review & Payment

RESPONSIVE TO UNIQUE NEEDS

At the end of the ride, AVVA describes the user’s surroundings and how to exit the vehicle. Context about the external environment is provided both proactively and in response to questions. To complete the trip, users can use AVVA to leave a ride review and pay right then and there.

Research & Analysis

Understanding a Unique Population

The most important goal of our research was to build empathy for visually impaired people’s transportation experiences. We needed to understand what information is necessary during a ride and the best way to present those data points. Through a partnership with the Associated Services for the Blind in Philadelphia, we interviewed participants in phone and in-person sessions.

Stakeholder Interviews

Extensive interviews with researchers, psychologists, linguists, and developers to understand existing accessible technology and its limitations.

Phone Interviews

Interviewed individuals about their current experience with using public transportation, paratransit, and taxi services.

In-Person Ride-Alongs & Interviews

Observed participants as they took a rideshare to a set destination, noting down all of the questions and issues that happened during the ride.

Users telling their own stories: YouTuber Joy Ross takes viewers through a ride in an Uber

Diving into the transportation experience

Through industry, academic, and stakeholder research, we found that most mobility-focused technology isn’t meeting the needs of visually impaired people. Even where there are attempts to make technology accessible, it falls short of providing useful information.

PRIMARY METHOD OF PERCEPTION IS AUDITORY

Other perception channels have limited use cases and are not flexible enough for the diverse environments riders encounter.

TECH DEVELOPMENT ISN’T ACCOUNTING FOR ACCESSIBILITY

Most forward-looking product research focuses on getting the functionality to work, but does not include an accessible feature set.

“SMART” TOOLS ARE MOSTLY USELESS

Technologically enhanced tools like smart canes, headphones, and goggles often transmit information that the user already knows.

Diagram illustrating all the needs and factors that a visually impaired person considers throughout a rideshare journey.

Keys to successful information perception

We discovered three critical factors that visually impaired people need in order to perceive their environment: geographic information, both at street scale and within the confined space of the vehicle; an understanding of their physical position in relation to their destination; and how information about the exterior and interior context is presented.

1. NAVIGATION & WAYFINDING

Inconsistency in routes, features, and workarounds disrupts travellers who rely on memorizing paths.

2. ORIENTATION

Users rely on organic sensing to understand their environment (street noise, traffic, crowds, construction).

3. INFORMATION PRESENTATION

To be useful, information has to be available on demand and in real time, and it must be approachable through a familiar interaction pattern.

The three key factors to successful information perception are navigation & wayfinding, orientation, and information presentation.

Grounded theory allowed us to identify overarching themes across the interview data.

THE FUNDAMENTALS OF A SMOOTH TRIP

The interviews and ride-alongs yielded a set of crucial themes that are necessary for a successful ride. Meeting all of these needs is the key to creating a product that is actually useful to visually impaired people.

1. SAFETY

Obstacles at drop-off points present a dangerous challenge if there is not enough information.

2. TRUST

Uncertainty, inconsistency, and opaqueness in information and service immediately erode trust.

3. EFFICIENCY

Users look for a service that affords them agency, control, and independence.

4. RELATIONSHIPS

A personal connection is the key to making riders feel comfortable and relaxed.

The fundamentals of a smooth trip for visually impaired people are a sense of safety, trust, efficient handling of their logistics, and the relationship they have with the service provider.

Ideation & Prototyping

WHAT IF THERE IS NO HUMAN DRIVER?

Voice Control Is the Most Effective Method of Interaction

While tangible interfaces offer unique interactions and novel ideas, they work against a consistent, easy-to-use experience. Our research showed that the key lies in information presentation. Using a matrix with modalities on the y-axis and the main themes on the x-axis, we explored ideas ranging from tangible interfaces like refreshable braille to voice control, noting which features are necessary and which questions we wanted the interface to answer.

USERS ALREADY USE VOICE CONTROL ON THEIR SMARTPHONES

Leveraging that interaction pattern and seamlessly transferring it to the interior of a vehicle keeps adjustment times short and learning curves gentle.

VOICE INTERACTION CAN HAPPEN IN ANY PHYSICAL SPACE

Users don’t have to search the inside of the cabin for physical affordances.

Narrowing down the feature set and information blocks.

PROTOTYPING VOICE INTERACTIONS

Testing with Users Using a Wizard of Oz Script

Before building out the full conversational script, we tested a barebones, low-fidelity version with users, staying flexible to real-time changes and feedback. We tested both a neutral and an efficient mood for the persona. Some participants expressed the need for quick access to information, while others wanted a more conversational tone.

In-person sessions inside a car

To get as close as possible to a real environment with real risks, we placed our participants in a car and ran the testing from inside.

Phone sessions

Although not as immersive as the in-car environment, phone sessions let us run prototype testing because the auditory perception channel is the same.

Testing with users inside a car to get as close as possible to the actual usage scenario.

We also tested with visually impaired users over the phone, since it uses the same perception channel.

Insights from prototyping sessions

Design Needs to Consider the Entire Context of Use

Design must account for changes in weather, environmental conditions, traffic, and lighting, as well as users’ existing knowledge. Multiple routes need to be generated in case the primary route isn’t available, and contingency plans must be readily available.

Give riders the agency to control their experience, at all levels of independence

Technological affordances need to be as salient as possible so access to information is simple

Provide easy access to escalation and intervention methods for personal and physical safety

Make route & journey information easy to access and change during the ride

Feature set and modules were finalized after incorporating user testing feedback.

Initiation module, triggered when the user gets in a car.

AVVA is designed around users’ needs and feedback

FLEXIBILITY

Catering to most levels of vision impairment, mobility, and independence.

ADAPTABILITY

Customization to users’ preferences and needs over time, stored in a rider profile.

RESPONSIVENESS

Addressing users’ actual pain points and struggles.

TRUST

Achieved through communication & transparency.
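To make the adaptability point more concrete, here is a hedged Python sketch of a rider profile. It remembers details a rider adds during a ride and picks between a quick phrasing and a more conversational one, reflecting the split in participant preferences observed during prototyping. The `RiderProfile` fields, the style names, and the message templates are hypothetical and are not AVVA's actual data model.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class RiderProfile:
    """Hypothetical rider profile that grows as the rider adds details over time."""
    style: str = "conversational"  # assumed values: "efficient" or "conversational"
    saved_places: Dict[str, str] = field(default_factory=dict)

    def remember_place(self, label: str, address: str) -> None:
        """Keep a detail the rider mentioned so future rides can reuse it."""
        self.saved_places[label.lower()] = address


# Two phrasings of the same information: quick access vs. a conversational tone.
PHRASINGS = {
    "efficient": "{minutes} min to {place}.",
    "conversational": "We're about {minutes} minutes away from {place}. Let me know if you need anything.",
}


def eta_message(profile: RiderProfile, minutes: int, place: str) -> str:
    """Phrase an arrival update in the rider's preferred style (default: conversational)."""
    template = PHRASINGS.get(profile.style, PHRASINGS["conversational"])
    return template.format(minutes=minutes, place=place)


rider = RiderProfile(style="efficient")
rider.remember_place("Work", "123 Example St")
print(eta_message(rider, 5, "work"))
# prints: 5 min to work.
```

Falling back to the conversational phrasing for an unknown style is a deliberate choice in this sketch. It means a new or incomplete profile still produces a friendly, complete sentence.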

Productization & Roadmap

AVVA as a source of data

Road testing the prototype

The first step toward productization is building a model to test on the road along a predefined route, with populated metadata that users interact with in a gated environment.

More modules & features = more user data

With every set of modules, there is a greater opportunity to collect usage data and improve features. By streamlining the responses and the information presented, we can target users’ needs and give them useful information when they need it most.

Roadmap & production phases

The project is split into implementation phases based on the modules that need to be built, the necessary integrations (sensors and APIs), and the data to be collected from each section.

Roadmap and build phases for AVVA

Next Steps & Takeaways

EVANGELIZING OUR SOLUTION

Publishing our findings

The research and prototyping we conducted are new to the industry, so we hope to publish our findings and see them included in the next rounds of research.

Rideshare inclusion

Rideshare companies are all working to create in-car interfaces, starting with tablet devices and eventually expanding to voice interfaces. By using AVVA as part of their system, companies can create an accessible and inclusive user experience and widen their customer base.

Digital “curb cut” for all of shared autonomy

Just as many accessibility features end up being extremely useful to the broader population (for example, a wheelchair-accessible curb ramp on a sidewalk), AVVA introduces many features that can help all users.
